AI Model for Diagrammatic Abductive Explanations

TECHNOLOGY OVERVIEW

As the world continues to make strides in artificial intelligence (AI), the need for transparency in the field intensifies. Clear and understandable explanations for the predictions of AI models not only enhance user confidence but also enable effective decision-making.

Such explanations are especially crucial in sectors like healthcare where predictions can have significant and sometimes life-changing consequences. A prime example is the diagnosis of cardiovascular diseases based on heart murmurs, where an incorrect or misunderstood diagnosis can have severe implications.

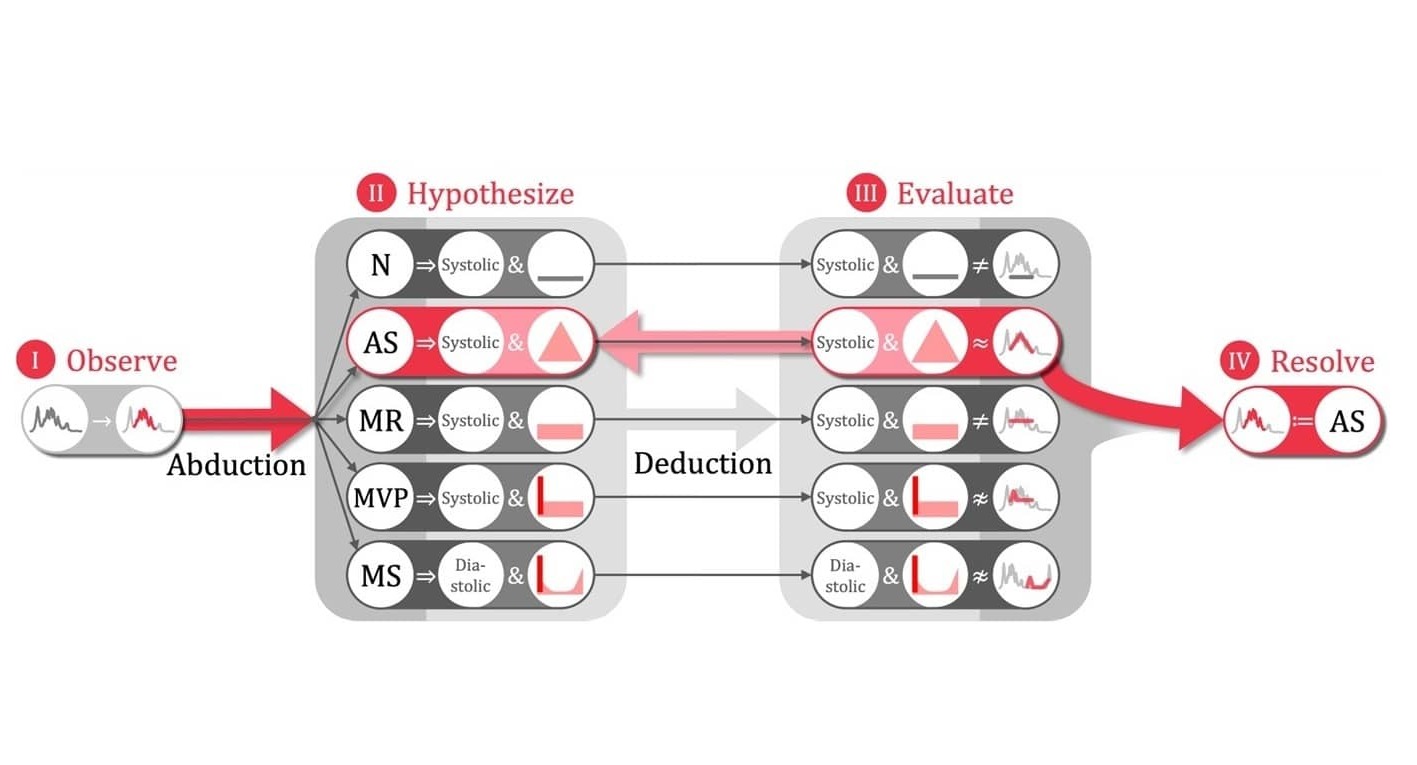

The technology, DiagramNet, is designed to offer human-like intuitive explanations for diagnosing cardiovascular diseases from heart sounds. It leverages the human reasoning processes of abduction and deduction to generate hypotheses of what diseases could have caused the specific heart sound, and to evaluate the hypotheses based on rules.

TECHNOLOGY FEATURES & SPECIFICATIONS

The technology tests which murmur shapes are present in the heart sound to determine the underlying cardiac disease. This approach of abductive-deductive AI reasoning can also be applied to other diagnostic or detective tasks.

DiagramNet uses deep learning AI to perform four key steps:

- ‘Observe event’ by observing displacement to interpret its amplitude, murmur location, and the heart phase in which the murmur occurred.

- ‘Generate plausible explanations’ by listing possible diagnoses, retrieving respective murmur shape functions, and initialising their corresponding shape hypotheses.

- ‘Evaluate plausibility’ by fitting each hypothesis to the observation, evaluating the rules in terms of shape goodness-of-fit in conjunction with matching the murmur heart phase.

- ‘Resolve explanation’ by combining the hypothesis-fitted inference with the initial inference to make a final inferred diagnosis.
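
The four steps above can be illustrated with a minimal sketch. It is not DiagramNet's implementation: DiagramNet uses deep learning, whereas this toy version uses hand-written shape templates and a least-squares fit. The shape functions, the `Hypothesis` class, the `shape_fit` and `diagnose` helpers, the three example diagnoses, and the rule that multiplies the initial inference by the fit score are all assumptions made for illustration.

```python
from dataclasses import dataclass
from typing import Callable, Dict, List, Tuple


# Hypothetical murmur shape templates over normalised time t in [0, 1].
def crescendo_decrescendo(t: float) -> float:
    return 1.0 - abs(2.0 * t - 1.0)  # rises to a peak, then falls


def plateau(t: float) -> float:
    return 1.0  # constant intensity


def decrescendo(t: float) -> float:
    return 1.0 - t  # starts loud, then fades


@dataclass
class Hypothesis:
    diagnosis: str
    phase: str  # heart phase in which the murmur is expected
    shape: Callable[[float], float]


# Step 2: candidate diagnoses with illustrative shapes and phases.
HYPOTHESES = [
    Hypothesis("aortic stenosis", "systole", crescendo_decrescendo),
    Hypothesis("mitral regurgitation", "systole", plateau),
    Hypothesis("aortic regurgitation", "diastole", decrescendo),
]


def shape_fit(template: List[float], observed: List[float]) -> float:
    """Fit a least-squares scale factor and return a goodness-of-fit in [0, 1]."""
    energy = sum(y * y for y in observed)
    norm = sum(x * x for x in template)
    if energy == 0.0 or norm == 0.0:
        return 0.0
    scale = sum(x * y for x, y in zip(template, observed)) / norm
    residual = sum((scale * x - y) ** 2 for x, y in zip(template, observed))
    return max(0.0, 1.0 - residual / energy)


def diagnose(
    amplitude: List[float], phase: str, initial: Dict[str, float]
) -> Tuple[str, Dict[str, float]]:
    """Steps 1-4 for an observed amplitude envelope (at least two samples)."""
    n = len(amplitude)
    times = [i / (n - 1) for i in range(n)]
    scores = {}
    for h in HYPOTHESES:
        # Step 3: the rule requires both a good shape fit and a phase match.
        fit = shape_fit([h.shape(t) for t in times], amplitude)
        scores[h.diagnosis] = fit if h.phase == phase else 0.0
    # Step 4: weight the initial inference by the hypothesis-fitted scores.
    combined = {d: initial.get(d, 0.0) * s for d, s in scores.items()}
    total = sum(combined.values()) or 1.0
    final = {d: v / total for d, v in combined.items()}
    return max(final, key=final.get), final


# Step 1 (stand-in): a synthetic systolic envelope instead of a real recording.
envelope = [0.8 * crescendo_decrescendo(i / 20) for i in range(21)]
prior = {
    "aortic stenosis": 0.35,
    "mitral regurgitation": 0.40,
    "aortic regurgitation": 0.25,
}
diagnosis, posterior = diagnose(envelope, "systole", prior)
print(diagnosis, posterior)
```

In this sketch the per-hypothesis scores double as the explanation: each candidate diagnosis can be shown alongside its fitted shape and whether its heart phase matched, rather than reporting only the final label.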

By offering clinically relevant explanations in an accessible format, DiagramNet bridges the gap between complex AI predictions and user understanding, fostering trust and actionable insights in critical healthcare applications.

POTENTIAL APPLICATIONS

Many existing AI models struggle to provide meaningful and easily interpretable explanations: the explanations they produce are either too technical or too simplistic. As such, there is an opportunity for a novel AI model that can generate thorough and easily understandable explanations. In the medical field, diagrams can be particularly beneficial for illustrating complex observations and making interpretations more accessible to non-technical users and patients alike.

UNIQUE VALUE PROPOSITION

- Enhances interpretation of AI decisions through a design framework for diagrammatic reasoning.

- Accelerates and strengthens the adoption of AI technology by leveraging diagrams that adhere to domain conventions.

- Presents a diverse array of explanation types, namely, abductive, contrastive and case-based explanations.

- Facilitates trust and consistency in AI-based cardiac diagnosis by providing murmur diagrams, which are a universally understood tool among clinicians.